Study Guide

Quiz

- Describe the format and key details of the Databricks Data Engineer Associate exam, including the number of questions, question format, and passing score.

- Explain the purpose of the Medallion Architecture in a data lakehouse and briefly describe the state of the data in the Bronze and Silver tables.

- What are the fundamental differences between batch processing and stream processing as described in the source?

- What is a “Job cluster” in Databricks, and how does it differ from an “All-Purpose cluster”?

- According to the source, what is Unity Catalog and what are its main components?

- List and explain three key features of Delta Lake that contribute to building reliable data pipelines.

- What is the primary function of Databricks Repos (now called Git folders) and what types of Git providers does it support?

- Define what a Databricks Unit (DBU) is and its role within the Databricks Lakehouse platform.

- What are Delta Live Tables (DLT), and what key benefits do they offer for building ETL pipelines?

- Explain the three-level namespace used by Unity Catalog to organize data assets.

Quiz Answer Key

- The Databricks Data Engineer Associate exam (exam code PR000054) consists of 45 multiple-choice questions with no multiple-select options. The exam lasts 90 minutes (1.5 hours), and a passing score of 70% is required. Because 70% of 45 is 31.5, a candidate needs at least 32 correct answers, which means no more than 13 questions can be answered incorrectly.

- The Medallion Architecture is a multi-layered data architecture used to progressively refine data in a lakehouse. The Bronze layer holds raw ingested data, which is often semi-structured or unclean and is commonly kept for archiving and reprocessing. The Silver layer contains data that has been transformed, filtered, cleaned, deduplicated, and standardized, preparing it for analytics and machine learning.

- Batch processing sends collections of data (batches) to be processed, typically on a schedule; it is not real-time, but it is cost-efficient and well suited to large workloads. Stream processing, by contrast, processes data as soon as it arrives, which makes it suitable for real-time analytics and video streaming, though it is generally more expensive than batch processing because compute must stay running to handle data as it arrives.

- A Job cluster is a temporary cluster created specifically to run an automated workload, such as a production job or ETL pipeline; it is created when the job run starts and terminates automatically once the run completes. An All-Purpose cluster, in contrast, is a shared, interactive cluster where multiple users collaboratively develop, test, and explore data in notebooks or the SQL editor; it keeps running until it is terminated manually or by its auto-termination setting.

- Unity Catalog is a unified governance layer in Databricks for centrally managing access, auditing, and lineage of data and AI assets across workspaces. Its main components include the Metastore (the top-level container), Catalogs, Schemas (like databases), Tables, Views, Volumes (for non-tabular data), Functions, and ML Models.

- Delta Lake provides several key features for reliability. ACID transactions ensure that operations are atomic, consistent, isolated, and durable. Schema evolution allows for changes to a table’s structure without breaking existing pipelines. Time Travel lets users query older versions of data, enabling easy restoration or auditing of previous data states.
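For the exam-format answer above, the 13-question margin comes from rounding the pass mark up to a whole number of correct answers. A minimal Python sketch of that arithmetic, assuming every one of the 45 questions is scored and weighted equally (an assumption for illustration); the helper names are hypothetical:

```python
def min_correct(total_questions: int, pass_percent: int) -> int:
    # Integer ceiling division: the smallest n with n / total_questions >= pass_percent / 100
    return -((-total_questions * pass_percent) // 100)


def max_wrong(total_questions: int, pass_percent: int) -> int:
    # Every question beyond the minimum needed to pass can be missed
    return total_questions - min_correct(total_questions, pass_percent)


print(min_correct(45, 70), max_wrong(45, 70))  # prints: 32 13
```

Integer arithmetic is used instead of multiplying by 0.7 so that floating-point rounding cannot affect the result.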
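For the Medallion Architecture answer above, the Bronze-to-Silver step can be illustrated with plain Python lists of dictionaries standing in for tables. This is a toy sketch, not Databricks code: the field names and records are hypothetical, and in Databricks the same steps would normally be written with Spark DataFrame operations such as `filter` and `dropDuplicates`.

```python
from datetime import date

# Hypothetical raw Bronze records: a duplicate, messy country codes, and a malformed amount
bronze = [
    {"order_id": "1", "country": " us ", "amount": "19.99", "order_date": "2024-01-05"},
    {"order_id": "1", "country": " us ", "amount": "19.99", "order_date": "2024-01-05"},
    {"order_id": "2", "country": "DE", "amount": "5.00", "order_date": "2024-01-06"},
    {"order_id": "3", "country": "fr", "amount": "not-a-number", "order_date": "2024-01-07"},
]


def to_silver(rows):
    """Filter, deduplicate, and standardize Bronze rows into Silver rows."""
    seen = set()
    silver = []
    for row in rows:
        try:
            # Enforce types; rows that cannot be parsed are filtered out
            order_id = int(row["order_id"])
            amount = float(row["amount"])
            order_date = date.fromisoformat(row["order_date"])
        except ValueError:
            continue
        if order_id in seen:  # deduplicate on the business key
            continue
        seen.add(order_id)
        silver.append({
            "order_id": order_id,
            "country": row["country"].strip().upper(),  # standardize country codes
            "amount": amount,
            "order_date": order_date,
        })
    return silver


print(to_silver(bronze))
```

The Bronze list is left untouched, which mirrors the role of the Bronze layer as the raw copy that can be reprocessed if the Silver logic changes.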
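For the batch-versus-stream answer above, the difference in when results become available can be shown with a conceptual Python sketch: the batch function needs the whole collection up front, while the streaming function emits an updated result after each event. This is only an illustration; streaming in Databricks is done with Spark Structured Streaming (for example `spark.readStream`), not with Python generators.

```python
from typing import Iterable, Iterator


def batch_total(events: Iterable[float]) -> float:
    # Batch: the full collection is available and processed in a single run
    return sum(events)


def streaming_totals(events: Iterable[float]) -> Iterator[float]:
    # Stream: each event is handled as it arrives, producing an updated running result
    running = 0.0
    for value in events:
        running += value
        yield running


events = [1.0, 2.5, 4.0]
print(batch_total(events))                    # one result at the end
print(list(streaming_totals(iter(events))))   # one result per arriving event
```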
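For the Job-cluster answer above, the distinction shows up in how a job task is configured. The sketch below builds a request body shaped after the Databricks Jobs API 2.1 `jobs/create` endpoint, where a task either defines a `new_cluster` (a job cluster created for the run) or points at an `existing_cluster_id` (an all-purpose cluster). The job name, notebook path, runtime version, node type, and cluster ID are all placeholders, and the payload is only printed, not sent; check the current API reference before relying on it.

```python
import json


def job_payload(use_job_cluster: bool) -> dict:
    task = {
        "task_key": "nightly_etl",  # hypothetical task name
        "notebook_task": {"notebook_path": "/Workspace/etl/nightly"},  # hypothetical path
    }
    if use_job_cluster:
        # Job cluster: defined inline, created when the run starts, terminated when it ends
        task["new_cluster"] = {
            "spark_version": "15.4.x-scala2.12",  # placeholder runtime version
            "node_type_id": "i3.xlarge",          # placeholder node type
            "num_workers": 2,
        }
    else:
        # All-purpose cluster: reuse an already running interactive cluster
        task["existing_cluster_id"] = "0123-456789-abcdefgh"  # placeholder ID
    return {"name": "nightly-etl", "tasks": [task]}


print(json.dumps(job_payload(use_job_cluster=True), indent=2))
```

Defining the cluster inline is what lets a production job start from a clean, dedicated environment and stop paying for compute as soon as the run finishes.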
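For the Delta Lake answer above, atomic commits and Time Travel can be modeled with a toy in-memory table in which every successful write produces a new, immutable version. This is not Delta Lake itself: the `VersionedTable` class is hypothetical, and its column check only stands in for schema enforcement (schema evolution is not modeled). In Delta Lake, the equivalent reads use SQL such as `SELECT * FROM my_table VERSION AS OF 1` or `TIMESTAMP AS OF`, and `RESTORE TABLE` rolls a table back, where `my_table` is a placeholder name.

```python
import copy


class VersionedTable:
    """Toy model of a Delta-style table: each successful commit adds a new immutable version."""

    def __init__(self, columns):
        self.columns = set(columns)
        self._versions = [[]]  # version 0 is the empty table

    def commit(self, new_rows):
        # All-or-nothing: validate the whole batch before publishing anything
        for row in new_rows:
            if set(row) != self.columns:
                raise ValueError(f"row does not match table columns: {sorted(row)}")
        snapshot = self._versions[-1] + [dict(row) for row in new_rows]
        self._versions.append(snapshot)
        return len(self._versions) - 1  # number of the new version

    def read(self, version=None):
        # Time travel: latest version by default, or any earlier version by number
        if version is None:
            version = len(self._versions) - 1
        return copy.deepcopy(self._versions[version])


table = VersionedTable(["id", "amount"])
v1 = table.commit([{"id": 1, "amount": 10}])
table.commit([{"id": 2, "amount": 20}])
print(table.read(), table.read(v1))
```

A commit that contains even one bad row raises before a new version is appended, so readers never see a partially written batch.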

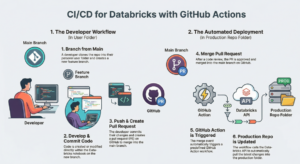

- Databricks Repos (Git folders) integrate with Git providers for version control, enabling collaboration directly within the Databricks workspace. They allow users to perform Git operations like cloning, committing, pushing, and pulling, and they support providers such as GitHub, GitLab, and Azure DevOps.

- A DBU, or Databricks Unit, is a normalized unit of processing power (a unit of compute) on the Databricks Lakehouse platform. The number of DBUs a workload consumes depends on the type and size of the compute it uses and how long it runs, which allows Databricks to measure and bill processing usage consistently across different instance types and services.

- Delta Live Tables (DLT) is a declarative framework that simplifies building batch and streaming ETL pipelines in Databricks. Key benefits include a unified approach for batch and streaming, automated orchestration and error recovery, built-in data quality checks via expectations, and visual monitoring with lineage tracking.

- Unity Catalog uses a three-level namespace to organize data, following standard SQL conventions. At the top level is a catalog, which contains multiple schemas (often equated to databases); each schema, in turn, contains data assets such as tables and views. An asset is therefore referenced as catalog.schema.table.
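For the DBU answer above, the billing idea reduces to simple arithmetic: DBUs consumed equal the compute's DBU rate multiplied by run time, and cost equals DBUs multiplied by the price per DBU. The rates below (4 DBU per hour, $0.15 per DBU) are hypothetical illustration values, not published prices, and the sketch ignores cloud-provider VM charges, which for classic compute are billed separately.

```python
def estimated_cost(dbu_per_hour: float, hours: float, price_per_dbu: float) -> float:
    # DBUs consumed = DBU rate of the compute x run time
    dbus = dbu_per_hour * hours
    # Databricks charge = DBUs x price per DBU, rounded to cents
    return round(dbus * price_per_dbu, 2)


# Hypothetical workload: 4 DBU/hour for 2.5 hours at $0.15 per DBU -> 10 DBUs
print(estimated_cost(4, 2.5, 0.15))
```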
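For the Delta Live Tables answer above, the built-in data-quality checks come in three flavors, which real pipelines declare with the `dlt` module's decorators `@dlt.expect` (keep invalid rows and record the violation), `@dlt.expect_or_drop` (drop invalid rows), and `@dlt.expect_or_fail` (stop the update). The `dlt` module only runs inside a pipeline, so the sketch below is a hypothetical plain-Python model of those three behaviors rather than DLT code.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]


def apply_expectation(
    rows: List[Row], name: str, check: Callable[[Row], bool], action: str = "warn"
) -> Tuple[List[Row], int]:
    """Toy model of the three DLT expectation behaviors; returns (output rows, violation count)."""
    valid = [row for row in rows if check(row)]
    violations = len(rows) - len(valid)
    if action == "warn":
        # Keep every row; violations are only reported as a data-quality metric
        return list(rows), violations
    if action == "drop":
        # Remove rows that break the constraint before they reach the target table
        return valid, violations
    if action == "fail":
        # Stop processing entirely if any row breaks the constraint
        if violations:
            raise RuntimeError(f"expectation {name!r} failed for {violations} row(s)")
        return list(rows), 0
    raise ValueError(f"unknown action: {action!r}")


orders = [{"id": 1, "amount": 10}, {"id": 2, "amount": -5}]  # hypothetical rows
print(apply_expectation(orders, "positive_amount", lambda r: r["amount"] > 0, "drop"))
```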
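For the three-level namespace answer above, the sketch below shows how a partially qualified name expands to catalog.schema.table by filling the missing leading parts from the session's current catalog and schema (the defaults that `USE CATALOG` and `USE SCHEMA` set in Databricks SQL). The names `main`, `sales`, and `orders` are hypothetical, and the simple split on dots does not handle backtick-quoted identifiers that themselves contain dots.

```python
from typing import NamedTuple


class QualifiedName(NamedTuple):
    catalog: str
    schema: str
    table: str


def resolve(identifier: str, current_catalog: str, current_schema: str) -> QualifiedName:
    """Expand a 1-, 2-, or 3-part name into catalog.schema.table."""
    parts = identifier.split(".")
    if len(parts) > 3 or any(not part for part in parts):
        raise ValueError(f"not a valid table name: {identifier!r}")
    # Missing leading parts are filled from the current catalog and schema
    defaults = [current_catalog, current_schema]
    return QualifiedName(*(defaults[: 3 - len(parts)] + parts))


print(resolve("orders", "main", "sales"))
```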

Essay Questions

- Compare and contrast the three primary table types available in Databricks Unity Catalog: Managed, External, and Foreign tables. Discuss their differences in terms of data storage location, management responsibility, read/write capabilities, and performance optimization potential.

- Describe the complete Medallion Architecture, detailing the purpose and characteristics of the Bronze, Silver, and Gold tables. Explain how data is transformed and refined as it moves through each layer of the lakehouse.

- Explain the concept of data lineage within Unity Catalog. How is it captured, what information does it provide, and why is it a critical feature for data governance and auditing in an enterprise environment?

- Discuss the role of Databricks Jobs in productionizing data pipelines. Describe how jobs can be used to run multiple dependent tasks, how they are scheduled using cron syntax, and what monitoring and alerting features are available for managing failures.

- Compare and contrast Databricks Repos (Git Folders) with native notebook versioning (like a standalone Jupyter Notebook). Focus on aspects like interface, collaboration features, repository management, and integration with advanced Git operations and CI/CD tools.

Glossary

| Term | Definition |
|---|---|
| ACID Transactions | A set of properties (Atomicity, Consistency, Isolation, Durability) that guarantee data validity despite errors or power failures. Delta Lake provides these guarantees for table operations. |
| All-Purpose Cluster | A shared, interactive Databricks cluster where multiple users can collaboratively develop, test, and explore data. It keeps running until it is terminated manually or by auto-termination. |
| Apache Spark | An open-source, distributed computing system used for big data processing and analytics. It is the core engine used by Databricks clusters. |
| Batch Processing | A method of processing data in which a collection of data (a batch) is processed together, typically on a schedule. It is cost-efficient and ideal for large workloads but is not real-time. |
| Catalog | In Unity Catalog, a top-level container for organizing data assets like schemas, tables, and views, often used to isolate business units or environments. |
| Cluster | A collection of virtual machines that run Spark and power notebooks, jobs, and pipelines. It consists of one driver node and, typically, multiple worker nodes. |
| Data Corruption | The unintended alteration of data during its lifecycle, leading to errors or loss of integrity. |
| Data Integrity | The maintenance and assurance of data accuracy and consistency over its entire lifecycle. |
| Data Lake | A centralized storage repository that holds vast amounts of raw data in various formats (structured, semi-structured, unstructured) without a predefined schema. |
| Data Lakehouse | A data management architecture that combines the flexibility and cost-efficiency of a data lake with the data management and ACID transaction features of a data warehouse. |
| Data Mart | A subset of a data warehouse, typically under 100 GB, that is focused on a single business line or team. |
| Data Warehouse | A relational data store, often column-oriented, designed for analytic workloads and reporting on large volumes of data (terabytes and millions of rows). |
| Databricks Connect | A client library for the Databricks runtime that allows IDEs (like VS Code, PyCharm) and custom applications to connect remotely to Databricks compute clusters. |
| Databricks Repos / Git Folder | A feature in Databricks that integrates with Git providers (like GitHub) for version control, allowing collaborative development of notebooks and scripts directly in the workspace. |
| Databricks Runtime | A versioned environment that defines the core Spark engine, libraries, and ML stack used by a Databricks cluster. |
| DBU (Databricks Unit) | A normalized unit of compute processing power on the Databricks platform, used for billing and measuring workload consumption. |
| Delta Lake | The default table format in Databricks, which adds a transaction log to cloud storage to provide ACID transactions, schema enforcement, time travel, and other reliability features. |
| Delta Live Tables (DLT) | A declarative framework in Databricks for building reliable, maintainable, and testable batch and streaming data pipelines. |
| External Table | A table in Unity Catalog where the metadata is managed by Databricks but the underlying data is stored in an external cloud location (like S3 or ADLS) that is not managed by Databricks. Also known as an unmanaged table. |
| Foreign Table | A read-only table in Unity Catalog that represents data stored in an external system, connected via Lakehouse Federation. |
| Job Cluster | A temporary Databricks cluster created for a specific automated workload, such as a production job or ETL pipeline, which terminates when the job completes. |
| Managed Table | The default table type in Unity Catalog, where both the metadata and the underlying data are fully managed by Databricks. |
| Medallion Architecture | A data architecture pattern with three layers (Bronze, Silver, Gold) used to progressively refine and structure data in a lakehouse. |
| Metastore | The top-level container for metadata in Unity Catalog, which registers data assets and the permissions governing them. |
| Notebooks (Databricks) | Interactive environments that combine code (Python, SQL, Scala, R), documentation, and visualizations, ideal for ETL workflows and data analysis. |
| Photon | Databricks’ native vectorized query engine, written in C++, that accelerates Apache Spark workloads and lowers their total cost. |
| Query | A request for data results (reads) or to perform operations like inserting, updating, or deleting (writes) on a database. |
| Schema | In Unity Catalog, a logical grouping of tables, views, and other data assets within a catalog, often analogous to a database. |
| Stream Processing | A method of processing data as soon as it arrives. It is used for real-time analytics and is generally more expensive than batch processing. |
| Three-Level Namespace | The hierarchical structure used by Unity Catalog to organize and access data: catalog.schema.table. |
| Time Travel (Delta Lake) | A feature of Delta Lake that allows users to query previous versions of a table using a version number or a timestamp. |
| Update Mode (Streaming) | An output mode for streaming queries that writes only the rows updated since the last trigger to the sink. |
| View | A read-only object defined by a query over one or more tables. It does not store data itself but registers the query text in the metastore. |
| Volume (Unity Catalog) | A logical volume of storage in a cloud object storage location, governed by Unity Catalog and used to manage non-tabular data (e.g., files). |
| Workspace | The central user interface in Databricks where users interact with assets like clusters, notebooks, jobs, and repos to build and manage data pipelines. |